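System preparation

Note: everything in this article was done on an ordinary network connection in mainland China, without any proxy or VPN, so you can follow along with confidence.

First, install one clean CentOS Linux machine. Once it is configured, it serves as the template for cloning.

Pick a suitable version from the Alibaba Cloud open-source mirror site, download it, and install CentOS 7.9.

On macOS with Parallels Desktop, turn off 3D graphics acceleration and "Always use high-performance graphics" under [Graphics > Advanced]; otherwise the installer cannot boot into the live OS.

Once installation finishes, start configuring the system.

Configure the yum repositories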

curl -o /etc/yum.repos.d/CentOS-Base.repo 'http://mirrors.cloud.tencent.com/repo/centos7_base.repo'

curl -L -o /etc/yum.repos.d/CentOS-Epel.repo 'http://mirrors.cloud.tencent.com/repo/epel-7.repo'

Update the system

yum makecache && yum update -y

Upgrade the kernel to 5.4

yum install -y wget unzip vim

wget http://mirrors.coreix.net/elrepo-archive-archive/kernel/el7/x86_64/RPMS/kernel-lt-devel-5.4.203-1.el7.elrepo.x86_64.rpm

wget http://mirrors.coreix.net/elrepo-archive-archive/kernel/el7/x86_64/RPMS/kernel-lt-headers-5.4.203-1.el7.elrepo.x86_64.rpm

wget http://mirrors.coreix.net/elrepo-archive-archive/kernel/el7/x86_64/RPMS/kernel-lt-5.4.203-1.el7.elrepo.x86_64.rpm

rpm -Uvh *.rpm

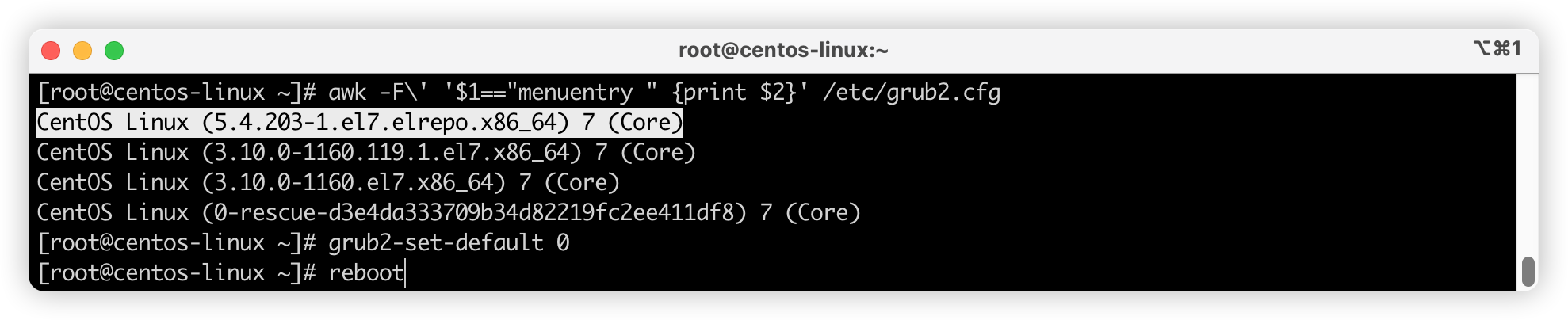

List the installed kernels and select the default boot entry

[root@centos-linux ~]# awk -F\' '$1=="menuentry " {print $2}' /etc/grub2.cfg

CentOS Linux (5.4.203-1.el7.elrepo.x86_64) 7 (Core)

CentOS Linux (3.10.0-1160.119.1.el7.x86_64) 7 (Core)

CentOS Linux (3.10.0-1160.el7.x86_64) 7 (Core)

CentOS Linux (0-rescue-d3e4da333709b34d82219fc2ee411df8) 7 (Core)

[root@centos-linux ~]# grub2-set-default 0
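
Hard-coding index 0 works here because the new kernel happens to be listed first. As a minimal sketch, the index can instead be looked up by version string with the same awk filter. The helper name `menuentry_index` is hypothetical and not part of GRUB.

```shell
# Hypothetical helper: print the zero-based index of the first GRUB menu
# entry whose title contains the given string (prints nothing if none match).
menuentry_index() {
  awk -F\' -v needle="$2" '
    BEGIN { n = 0 }
    $1 == "menuentry " {
      if (index($2, needle)) { print n; exit }
      n++
    }' "$1"
}

# Intended use on the real system (run as root):
#   grub2-set-default "$(menuentry_index /etc/grub2.cfg 5.4.203)"
#   grub2-editenv list    # shows the saved_entry that will boot next
```

Looking the entry up by version keeps the command correct even if a later `yum update` changes the order of the menu entries.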

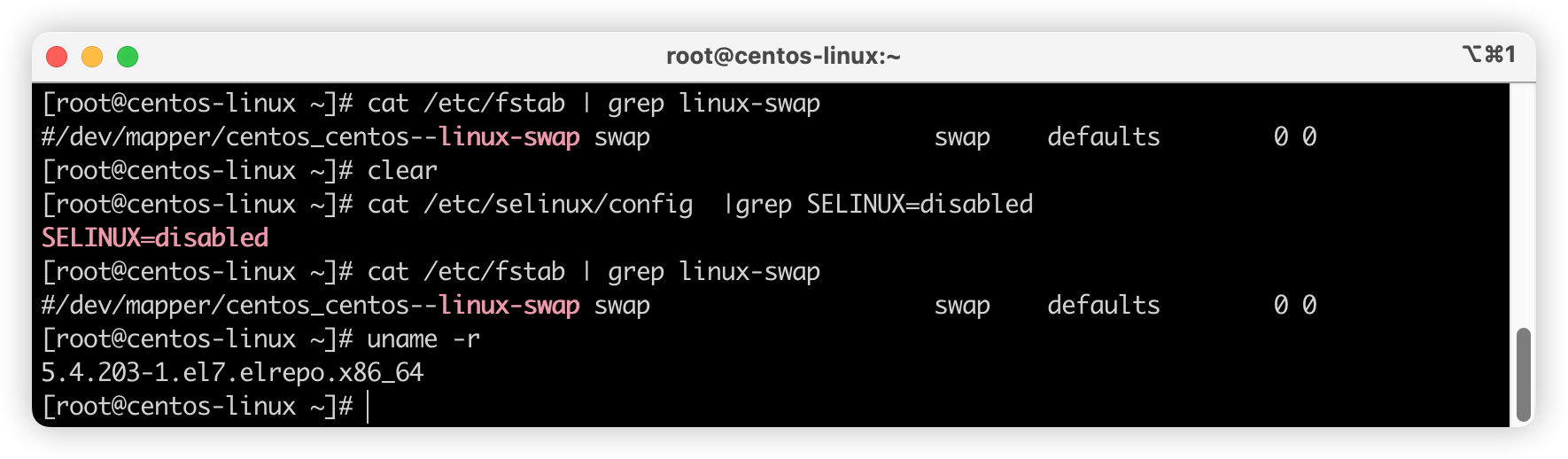

Permanently disable SELinux and swap

sed -i 's/SELINUX=enforcing/SELINUX=disabled/g' /etc/selinux/config

sed -i 's/.*swap.*/#&/g' /etc/fstab

Stop the firewall and flush the iptables rules

systemctl disable firewalld && systemctl stop firewalld

iptables -F && iptables -t nat -F && iptables -t mangle -F && iptables -X && iptables -P FORWARD ACCEPT

Disable NetworkManager

systemctl disable NetworkManager && systemctl stop NetworkManager

Load the IPVS modules

yum -y install ipset ipvsadm

mkdir -p /etc/sysconfig/modules

cat > /etc/sysconfig/modules/ipvs.modules <<EOF

modprobe -- ip_vs

modprobe -- ip_vs_rr

modprobe -- ip_vs_wrr

modprobe -- ip_vs_sh

modprobe -- nf_conntrack

EOF

modprobe -- nf_conntrack

chmod 755 /etc/sysconfig/modules/ipvs.modules && bash /etc/sysconfig/modules/ipvs.modules && lsmod | grep -e ip_vs -e nf_conntrack
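
The `lsmod | grep` at the end of that command prints whatever matched, which makes a missing module easy to overlook. A small sketch that lists only the modules that are absent; the helper name `missing_modules` is hypothetical.

```shell
# Hypothetical helper: read lsmod-style output on stdin and print every
# expected module name (given as arguments) that is not loaded.
missing_modules() {
  loaded=$(awk 'NR > 1 { print $1 }')
  for m in "$@"; do
    printf '%s\n' "$loaded" | grep -qx "$m" || echo "$m"
  done
}

# Intended use:
#   lsmod | missing_modules ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack
# No output means every listed module is loaded.
```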

Enable br_netfilter and IPv4 forwarding

# Load the overlay and br_netfilter kernel modules now and at every boot

cat <<EOF | sudo tee /etc/modules-load.d/k8s.conf

overlay

br_netfilter

EOF

sudo modprobe overlay

sudo modprobe br_netfilter

# Set the required sysctl parameters; they persist across reboots

cat <<EOF | sudo tee /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-iptables = 1

net.bridge.bridge-nf-call-ip6tables = 1

net.ipv4.ip_forward = 1

EOF

# Apply the sysctl parameters without rebooting

sudo sysctl --system

# Verify that the settings took effect

lsmod | grep br_netfilter

lsmod | grep overlay

sysctl net.bridge.bridge-nf-call-iptables net.bridge.bridge-nf-call-ip6tables net.ipv4.ip_forward

Kernel tuning

cat > /etc/sysctl.d/99-sysctl.conf << 'EOF'

# sysctl settings are defined through files in

# /usr/lib/sysctl.d/, /run/sysctl.d/, and /etc/sysctl.d/.

#

# Vendors settings live in /usr/lib/sysctl.d/.

# To override a whole file, create a new file with the same name in

# /etc/sysctl.d/ and put new settings there. To override

# only specific settings, add a file with a lexically later

# name in /etc/sysctl.d/ and put new settings there.

#

# For more information, see sysctl.conf(5) and sysctl.d(5).

# Controls IP packet forwarding

# Controls source route verification

net.ipv4.conf.default.rp_filter = 1

# Do not accept source routing

net.ipv4.conf.default.accept_source_route = 0

# Controls the System Request debugging functionality of the kernel

# Controls whether core dumps will append the PID to the core filename.

# Useful for debugging multi-threaded applications.

kernel.core_uses_pid = 1

# Controls the use of TCP syncookies

net.ipv4.tcp_syncookies = 1

# Controls the default maximum size of a message queue, in bytes

kernel.msgmnb = 65536

# Controls the maximum size of a single message, in bytes

kernel.msgmax = 65536

net.ipv4.conf.all.promote_secondaries = 1

net.ipv4.conf.default.promote_secondaries = 1

net.ipv6.neigh.default.gc_thresh3 = 4096

kernel.sysrq = 1

net.ipv6.conf.all.disable_ipv6=0

net.ipv6.conf.default.disable_ipv6=0

net.ipv6.conf.lo.disable_ipv6=0

kernel.numa_balancing = 0

kernel.shmmax = 68719476736

kernel.printk = 5

net.core.rps_sock_flow_entries=8192

net.bridge.bridge-nf-call-ip6tables=1

net.ipv4.ip_local_reserved_ports=60001,60002

net.core.rmem_max=16777216

fs.inotify.max_user_watches=524288

kernel.core_pattern=core

net.core.dev_weight_tx_bias=1

net.ipv4.tcp_max_orphans=32768

kernel.pid_max=4194304

kernel.softlockup_panic=1

fs.file-max=3355443

net.core.bpf_jit_harden=1

net.ipv4.tcp_max_tw_buckets=32768

fs.inotify.max_user_instances=8192

net.core.bpf_jit_kallsyms=1

vm.max_map_count=262144

kernel.threads-max=262144

net.core.bpf_jit_enable=1

net.ipv4.tcp_keepalive_time=600

net.ipv4.tcp_wmem=4096 12582912 16777216

net.core.wmem_max=16777216

net.ipv4.neigh.default.gc_thresh1=2048

net.core.somaxconn=32768

net.ipv4.neigh.default.gc_thresh3=8192

net.ipv4.ip_forward=1

net.ipv4.neigh.default.gc_thresh2=4096

net.ipv4.tcp_max_syn_backlog=8096

net.bridge.bridge-nf-call-iptables=1

net.ipv4.tcp_rmem=4096 12582912 16777216

EOF

# Apply the sysctl parameters without rebooting

sudo sysctl --system

Reboot the system
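
After the reboot it is worth confirming that the machine came up in the expected state before cloning it. The following is a minimal sketch, not part of the original procedure; `version_ge` is a hypothetical helper and relies on `sort -V` from GNU coreutils.

```shell
# version_ge A B: succeed when version A >= version B (uses sort -V).
version_ge() {
  [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

# Compare the running kernel (release suffix stripped) against 5.4.
running=$(uname -r | cut -d- -f1)
if version_ge "$running" 5.4; then
  echo "kernel: $(uname -r) (>= 5.4)"
else
  echo "kernel: $(uname -r) (older than 5.4, re-check grub2-set-default)"
fi

# getenforce and swapon exist on CentOS; guard them so the sketch runs anywhere.
if command -v getenforce >/dev/null 2>&1; then
  echo "selinux: $(getenforce)"
fi
if command -v swapon >/dev/null 2>&1; then
  echo "active swap devices: $(swapon --show --noheadings 2>/dev/null | wc -l)"
fi
```

On the prepared template the expectation is a 5.4 kernel, SELinux reported as Disabled, and zero active swap devices.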

Prepare the cluster machines

Clone three machines (1 master + 2 workers)

After cloning, my three machines have the following IPs:

| Node role | IP |
|---|---|
| k8s-master | 10.211.55.13 |
| k8s-worker1 | 10.211.55.14 |
| k8s-worker2 | 10.211.55.15 |

Configure /etc/hosts (all machines)

cat >> /etc/hosts << EOF

10.211.55.13 k8s-master

10.211.55.14 k8s-worker1

10.211.55.15 k8s-worker2

EOF
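
Cloned machines start out with the template's hostname, while the kubeadm configuration later registers the master under the name k8s-master. The original steps do not show the rename, so the following is only a sketch under that assumption; `hostname_for_ip` is a hypothetical helper that looks the name up in the hosts entries just written.

```shell
# Hypothetical helper: print the hostname mapped to an IP in a hosts-format file.
hostname_for_ip() {
  awk -v ip="$2" '$1 == ip { print $2; exit }' "$1"
}

# Intended use on each clone (run as root), passing that machine's own IP:
#   hostnamectl set-hostname "$(hostname_for_ip /etc/hosts 10.211.55.14)"
#   hostname    # should now print k8s-worker1
```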

Install the cluster

Install containerd 1.6.31 (all nodes)

wget -O /etc/yum.repos.d/docker-ce.repo https://download.docker.com/linux/centos/docker-ce.repo

yum makecache

yum -y install containerd.io-1.6.31

Configure containerd

containerd config default | sudo tee /etc/containerd/config.toml

# Switch the cgroup driver to systemd

sed -ri 's#SystemdCgroup = false#SystemdCgroup = true#' /etc/containerd/config.toml

# Point sandbox_image at the Aliyun mirror of the pause image

sed -ri 's#registry.k8s.io\/pause:3.6#registry.aliyuncs.com\/google_containers\/pause:3.9#' /etc/containerd/config.toml

# docker.io registry mirrors

mkdir -p /etc/containerd/certs.d/docker.io

cat > /etc/containerd/certs.d/docker.io/hosts.toml << 'EOF'

server = "https://docker.io" # upstream registry

[host."https://docker.m.daocloud.io"] # DaoCloud mirror

capabilities = ["pull","resolve"]

[host."https://dockerproxy.com"] # mirror

capabilities = ["pull", "resolve"]

[host."https://docker.mirrors.sjtug.sjtu.edu.cn"] # Shanghai Jiao Tong University mirror

capabilities = ["pull","resolve"]

[host."https://docker.mirrors.ustc.edu.cn"] # USTC mirror

capabilities = ["pull","resolve"]

[host."https://docker.nju.edu.cn"] # Nanjing University mirror

capabilities = ["pull","resolve"]

[host."https://registry-1.docker.io"]

capabilities = ["pull","resolve","push"]

EOF

# registry.k8s.io registry mirror

mkdir -p /etc/containerd/certs.d/registry.k8s.io

cat > /etc/containerd/certs.d/registry.k8s.io/hosts.toml << 'EOF'

server = "https://registry.k8s.io"

[host."https://k8s.m.daocloud.io"]

capabilities = ["pull", "resolve", "push"]

EOF

# quay.io registry mirror

mkdir -p /etc/containerd/certs.d/quay.io

cat > /etc/containerd/certs.d/quay.io/hosts.toml << 'EOF'

server = "https://quay.io"

[host."https://quay.m.daocloud.io"]

capabilities = ["pull", "resolve", "push"]

EOF

# docker.elastic.co registry mirror

mkdir -p /etc/containerd/certs.d/docker.elastic.co

tee /etc/containerd/certs.d/docker.elastic.co/hosts.toml << 'EOF'

server = "https://docker.elastic.co"

[host."https://elastic.m.daocloud.io"]

capabilities = ["pull", "resolve", "push"]

EOF
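
The hosts.toml files above are only consulted when the CRI plugin's registry `config_path` points at `/etc/containerd/certs.d`, and none of the commands in this article set it. The sketch below assumes the generated config.toml still contains the default line `config_path = ""` (worth confirming with grep first); `enable_certs_d` is a hypothetical helper name.

```shell
# Hypothetical helper: point the CRI registry config_path at the certs.d
# directory. Assumes the file still has the default line: config_path = ""
# A backup of the original file is kept next to it with a .bak suffix.
enable_certs_d() {
  sed -i.bak 's#config_path = ""#config_path = "/etc/containerd/certs.d"#' "$1"
}

# Intended use (run as root, before containerd is restarted):
#   grep -n 'config_path' /etc/containerd/config.toml
#   enable_certs_d /etc/containerd/config.toml
#   grep -n 'config_path' /etc/containerd/config.toml
```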

systemctl daemon-reload

systemctl enable containerd --now

systemctl restart containerd

systemctl status containerd

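Configure crictl (the command-line client for the container runtime)

# Write the crictl configuration (overwrite, so re-running does not duplicate entries)

cat << EOF > /etc/crictl.yaml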

runtime-endpoint: unix:///var/run/containerd/containerd.sock

image-endpoint: unix:///var/run/containerd/containerd.sock

timeout: 10

debug: false

EOF

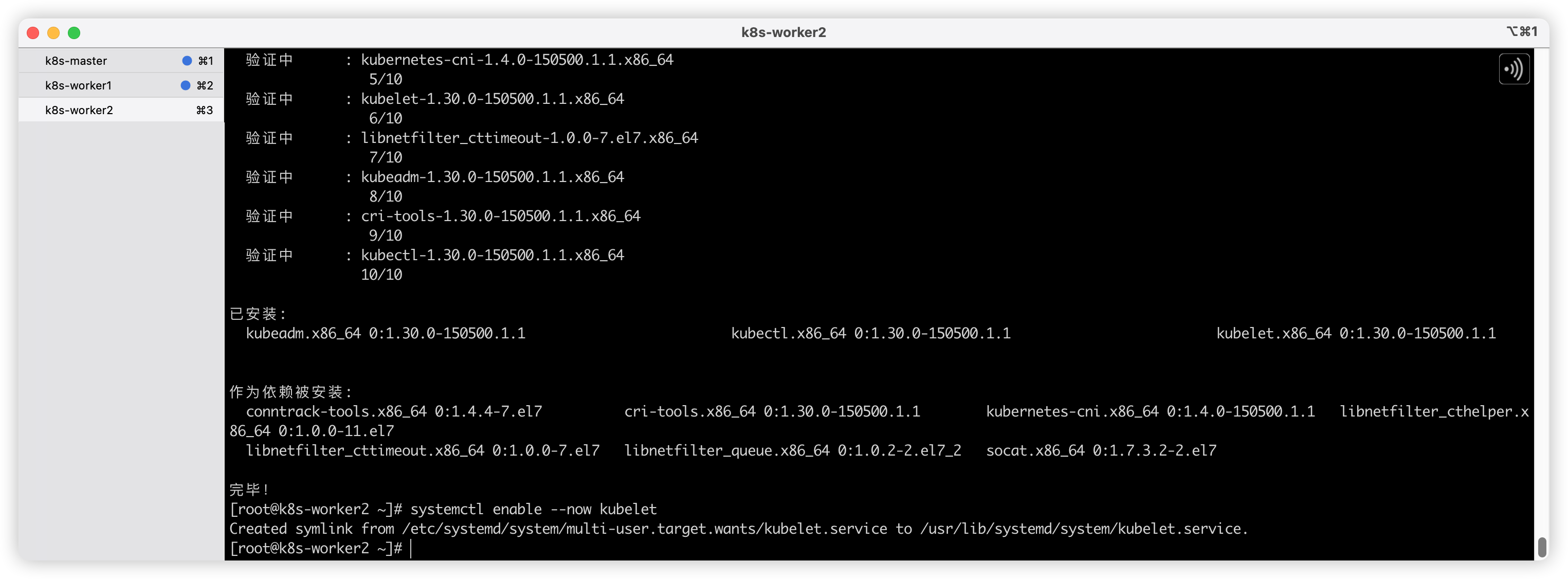

Install kubeadm, kubelet, and kubectl (all nodes) from the Tsinghua University (TUNA) mirror

cat > /etc/yum.repos.d/kubernetes.repo << 'EOF'

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.tuna.tsinghua.edu.cn/kubernetes/core:/stable:/v1.30/rpm/

enabled=1

gpgcheck=1

gpgkey=https://pkgs.k8s.io/core:/stable:/v1.30/rpm/repodata/repomd.xml.key

EOF

yum makecache

yum -y install kubeadm-1.30.0 kubelet-1.30.0 kubectl-1.30.0

systemctl enable --now kubelet

Initialize the master node

mkdir ~/kubeadm_init && cd ~/kubeadm_init

kubeadm config print init-defaults > kubeadm-init.yaml

Be sure to set localAPIEndpoint.advertiseAddress to the master node's IP.

cat > ~/kubeadm_init/kubeadm-init.yaml << EOF
apiVersion: kubeadm.k8s.io/v1beta3
bootstrapTokens:
- groups:
  - system:bootstrappers:kubeadm:default-node-token
  token: abcdef.0123456789abcdef
  ttl: 24h0m0s
  usages:
  - signing
  - authentication
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 10.211.55.13
  bindPort: 6443
nodeRegistration:
  criSocket: unix:///var/run/containerd/containerd.sock
  imagePullPolicy: IfNotPresent
  name: k8s-master
  taints:
  - effect: NoSchedule
    key: node-role.kubernetes.io/k8s-master
---
apiServer:
  timeoutForControlPlane: 4m0s
apiVersion: kubeadm.k8s.io/v1beta3
certificatesDir: /etc/kubernetes/pki
clusterName: kubernetes
controllerManager: {}
dns: {}
etcd:
  local:
    dataDir: /var/lib/etcd
imageRepository: registry.aliyuncs.com/google_containers
kind: ClusterConfiguration
kubernetesVersion: v1.30.0
networking:
  dnsDomain: cluster.local
  podSubnet: 10.244.0.0/16
  serviceSubnet: 10.96.0.0/12
scheduler: {}
---
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
mode: ipvs
---
apiVersion: kubelet.config.k8s.io/v1beta1
kind: KubeletConfiguration
cgroupDriver: systemd
EOF

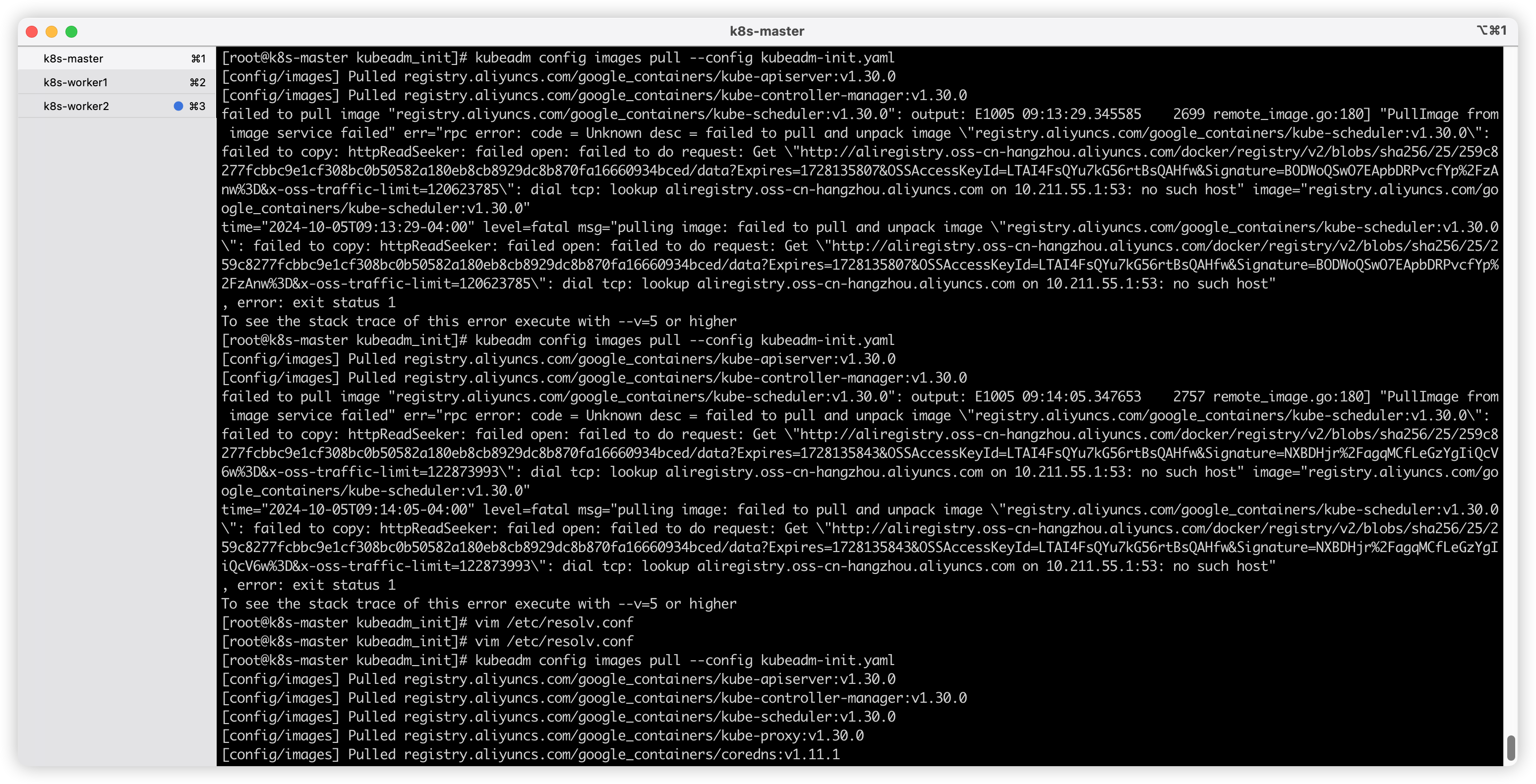

List the required images and pre-pull them

kubeadm config images list --config kubeadm-init.yaml

kubeadm config images pull --config kubeadm-init.yaml

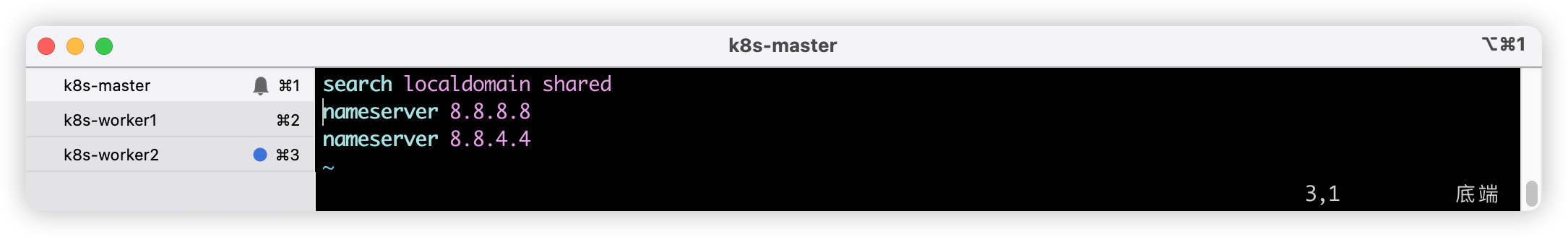

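If the pull fails with an error like the one in the screenshot, edit the DNS settings in /etc/resolv.conf and remove the entry that resolves through the local gateway. Ideally, make the same change on every node.

DNS entries after the change: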
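
The original does not list the exact entries, so the following is only a sketch of the kind of edit described. It assumes the gateway resolver is 10.211.55.1 (typical for this Parallels subnet, but check your own file) and uses the public AliDNS resolver 223.5.5.5 as an example replacement; `disable_nameserver` is a hypothetical helper.

```shell
# Hypothetical helper: comment out one nameserver entry in a
# resolv.conf-style file, leaving every other line untouched.
disable_nameserver() {
  awk -v ip="$2" '
    $1 == "nameserver" && $2 == ip { print "# " $0; next }
    { print }' "$1" > "$1.new" && cat "$1.new" > "$1" && rm -f "$1.new"
}

# Intended use (run as root); both addresses are assumptions, adjust as needed:
#   disable_nameserver /etc/resolv.conf 10.211.55.1
#   echo 'nameserver 223.5.5.5' >> /etc/resolv.conf
```

Because NetworkManager was disabled earlier, manual edits to /etc/resolv.conf are not overwritten by it.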

Initialize the master node from the kubeadm-init.yaml configuration file

kubeadm init --config=kubeadm-init.yaml | tee kubeadm-init.log

If the images were pulled beforehand, the master node initializes within a few tens of seconds.

Post-initialization steps

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

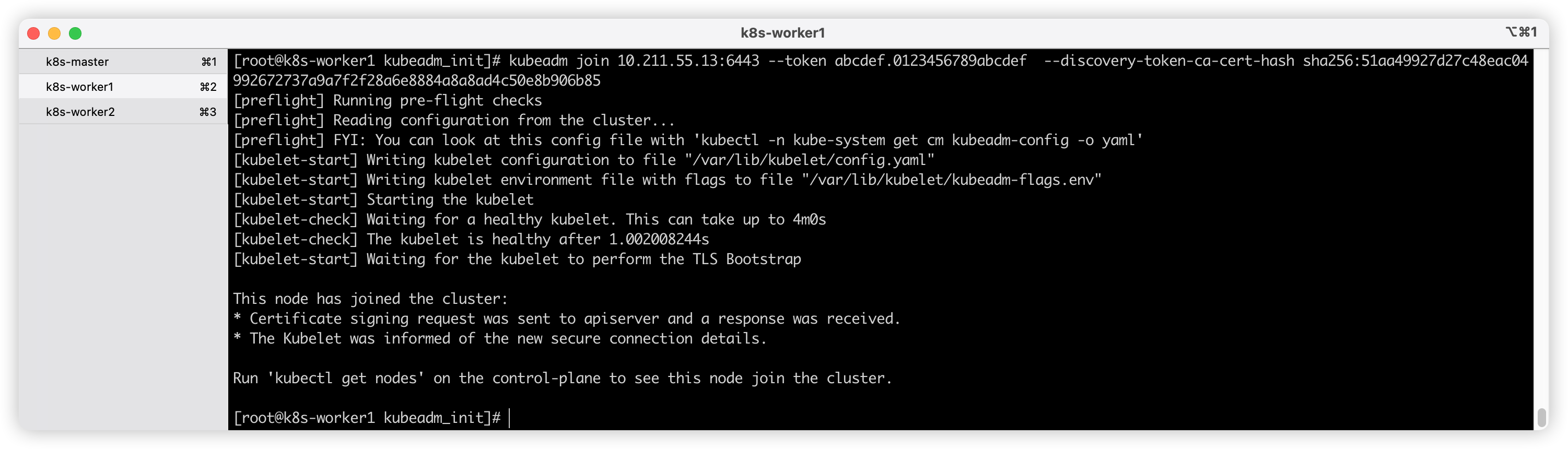

Join the worker nodes to the cluster (use the command printed after a successful init)

kubeadm join 10.211.55.13:6443 --token abcdef.0123456789abcdef \
    --discovery-token-ca-cert-hash sha256:51aa49927d27c48eac04992672737a9a7f2f28a6e8884a8a8ad4c50e8b906b85
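
The bootstrap token in kubeadm-init.yaml has a TTL of 24 hours, so this exact command stops working a day after init. On the master, `kubeadm token create --print-join-command` prints a fresh one. The CA hash can also be recomputed by hand with the openssl pipeline from the kubeadm documentation; the wrapper name `ca_cert_hash` below is hypothetical, and the pipeline assumes an RSA CA key, which is the kubeadm default.

```shell
# Hypothetical wrapper: print the sha256 hash of a CA certificate's public
# key, as expected after "sha256:" in --discovery-token-ca-cert-hash.
ca_cert_hash() {
  openssl x509 -pubkey -in "$1" \
    | openssl rsa -pubin -outform der 2>/dev/null \
    | openssl dgst -sha256 -hex \
    | sed 's/^.* //'
}

# Intended use on the master (run as root):
#   kubeadm token create --print-join-command
#   echo "sha256:$(ca_cert_hash /etc/kubernetes/pki/ca.crt)"
```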

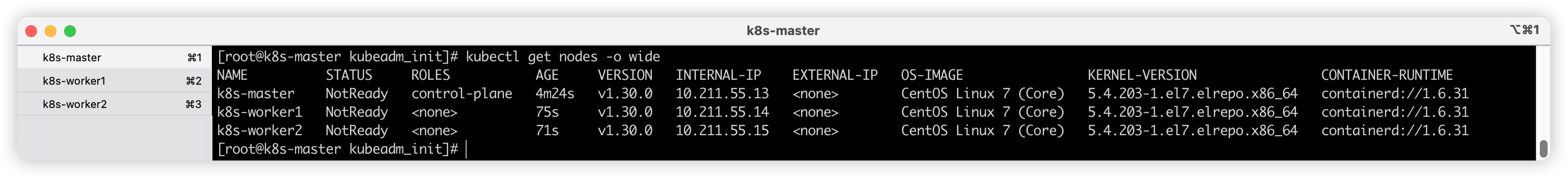

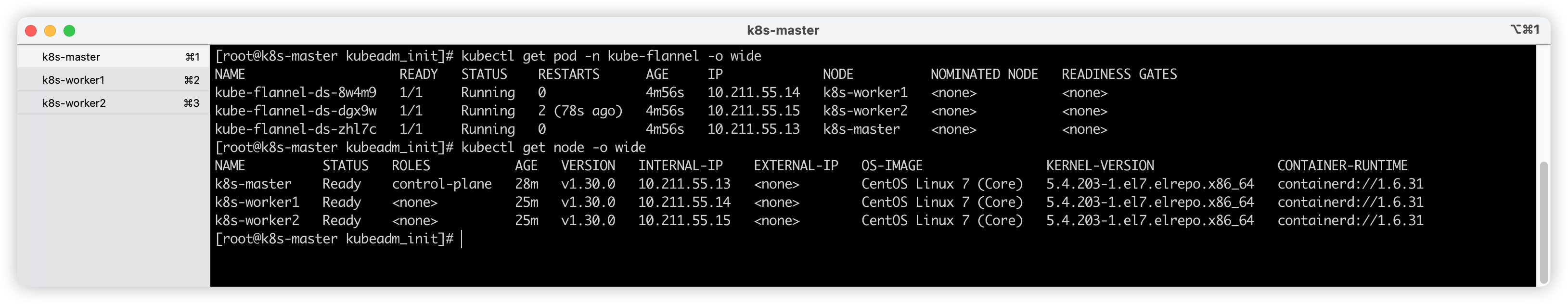

Once all worker nodes have joined, check the node list on the master. At this point every node should be present, but all of them are still NotReady.
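
The check itself is `kubectl get nodes`. As a small sketch, the output can also be reduced to a single number, which is convenient when re-checking after the network plugin is installed; `count_not_ready` is a hypothetical helper.

```shell
# Hypothetical helper: read `kubectl get nodes` output on stdin and print how
# many nodes have a STATUS column other than exactly "Ready".
count_not_ready() {
  awk 'NR > 1 && $2 != "Ready" { n++ } END { print n + 0 }'
}

# Intended use on the master:
#   kubectl get nodes -o wide
#   kubectl get nodes | count_not_ready
```

Right after the joins the count should equal the number of nodes; it drops to 0 once the network plugin is running on every node.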

Install the network plugin

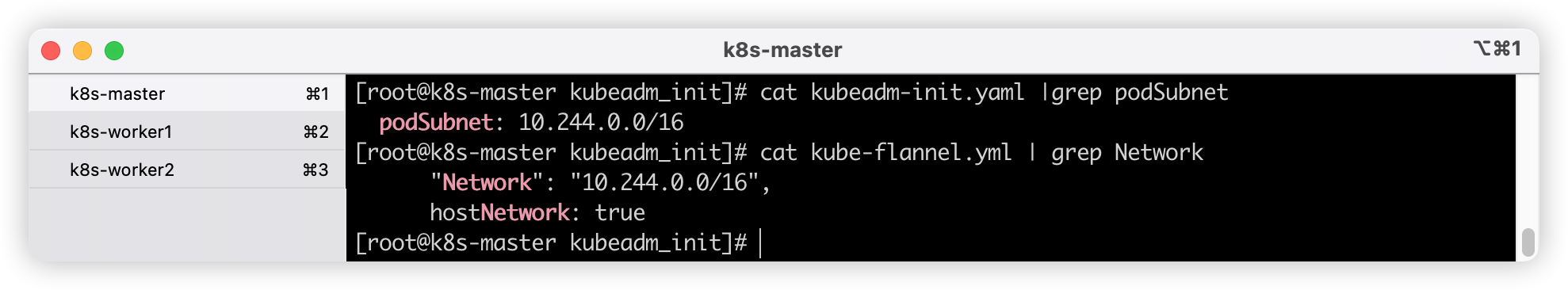

I went with the flannel network plugin. I originally wanted Calico, but its images could not be pulled on an ordinary network connection. flannel's images pull slowly, but they do pull.

wget https://github.com/flannel-io/flannel/releases/latest/download/kube-flannel.yml

flannel's default network is 10.244.0.0/16, which matches the podSubnet in my kubeadm-init.yaml, so nothing needs to change. If you changed podSubnet when initializing the master, set the Network value in net-conf.json inside kube-flannel.yml to the same CIDR.
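
For the case where podSubnet was changed, the edit can be scripted. This is a minimal sketch that assumes the manifest contains a line of the form `"Network": "10.244.0.0/16"` inside net-conf.json; `set_flannel_network` is a hypothetical helper and 10.200.0.0/16 is only an example value.

```shell
# Hypothetical helper: rewrite the "Network" value in a kube-flannel.yml file.
# A backup of the original file is kept next to it with a .bak suffix.
set_flannel_network() {
  sed -i.bak "s#\"Network\": \"[^\"]*\"#\"Network\": \"$2\"#" "$1"
}

# Intended use, with the CIDR matching podSubnet in kubeadm-init.yaml:
#   set_flannel_network kube-flannel.yml 10.200.0.0/16
#   grep -n '"Network"' kube-flannel.yml
```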

Install flannel from the master node

kubectl apply -f kube-flannel.yml

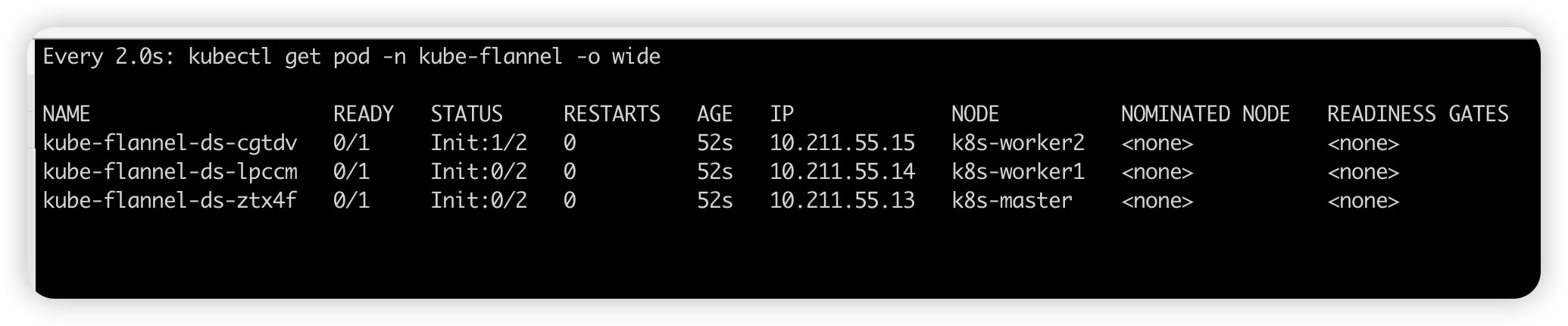

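Wait for all nodes to become Ready. If a pod fails on some node, change that node's DNS configuration to match the master's.

Once every pod in the kube-flannel namespace is Running, the cluster installation is complete.

Install the dashboard

I use Kuboard as the cluster dashboard. It can run either inside the Kubernetes cluster or independently of it.

This time it runs under Docker on the host machine, independent of the Kubernetes cluster on the three virtual machines. Save the following as docker-compose.yaml: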

configs:
  create_db_sql:
    content: |
      CREATE DATABASE kuboard DEFAULT CHARACTER SET = 'utf8mb4' DEFAULT COLLATE = 'utf8mb4_unicode_ci';
      create user 'kuboard'@'%' identified by 'kuboardpwd';
      grant all privileges on kuboard.* to 'kuboard'@'%';
      FLUSH PRIVILEGES;

services:
  db:
    image: swr.cn-east-2.myhuaweicloud.com/kuboard/mariadb:11.3.2-jammy
    # image: mariadb:11.3.2-jammy
    # swr.cn-east-2.myhuaweicloud.com/kuboard/mariadb:11.3.2-jammy is identical to mariadb:11.3.2-jammy
    environment:
      MARIADB_ROOT_PASSWORD: kuboardpwd
      MYSQL_ROOT_PASSWORD: kuboardpwd
      TZ: Asia/Shanghai
    volumes:
      - ./kuboard-mariadb-data:/var/lib/mysql:Z
    configs:
      - source: create_db_sql
        target: /docker-entrypoint-initdb.d/create_db.sql
        mode: 0777
    networks:
      kuboard_v4_dev:
        aliases:
          - db
  kuboard:
    image: swr.cn-east-2.myhuaweicloud.com/kuboard/kuboard:v4
    # image: eipwork/kuboard:v4
    environment:
      - DB_DRIVER=org.mariadb.jdbc.Driver
      - DB_URL=jdbc:mariadb://db:3306/kuboard?serverTimezone=Asia/Shanghai
      - DB_USERNAME=kuboard
      - DB_PASSWORD=kuboardpwd
    ports:
      - '8000:80'
    depends_on:
      - db
    networks:
      kuboard_v4_dev:
        aliases:
          - kuboard

networks:
  kuboard_v4_dev:
    driver: bridge

docker compose up -d

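Once the containers are running, open http://<host-ip>:8000 (the compose file publishes container port 80 on host port 8000) and log in with admin / Kuboard123.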

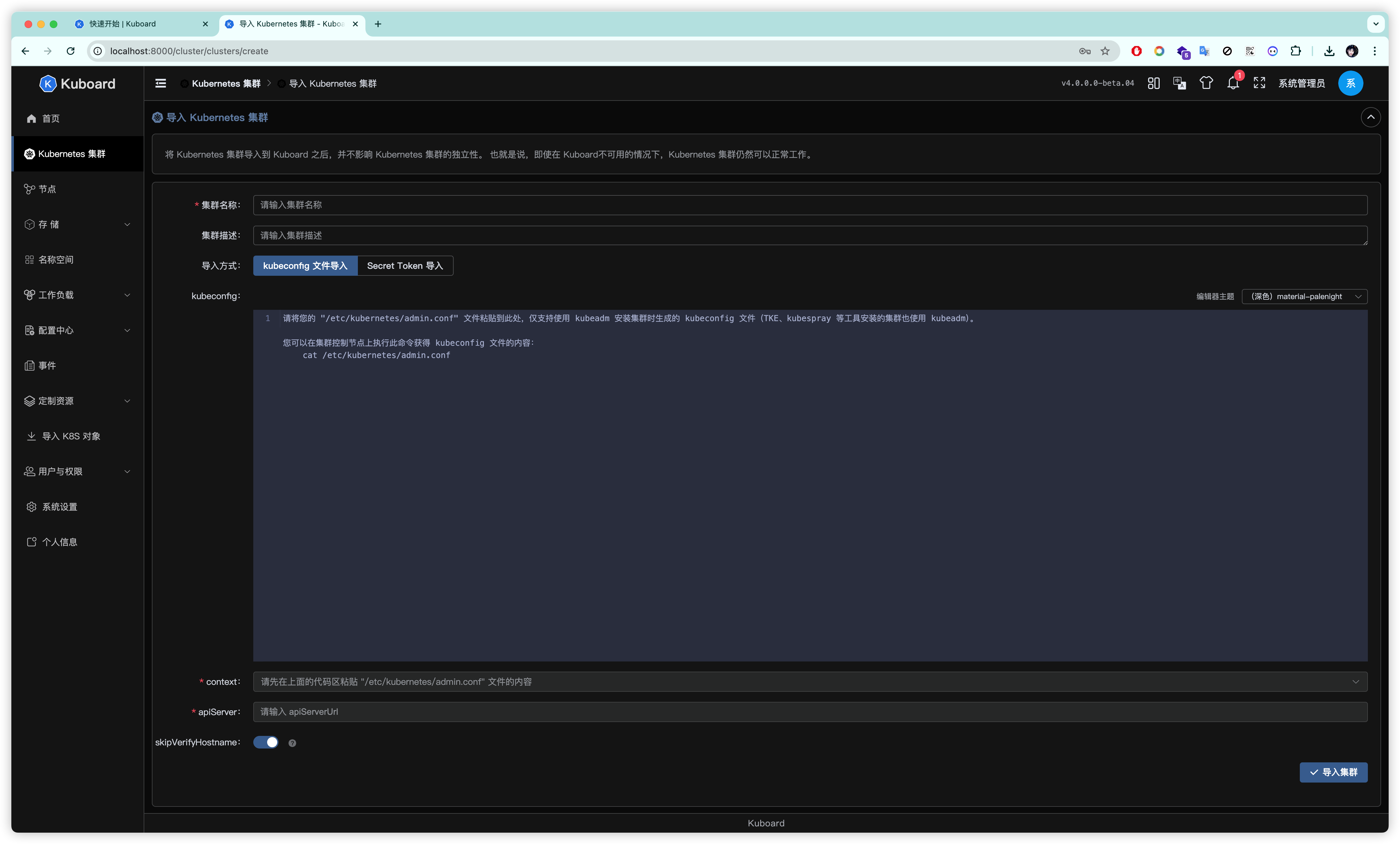

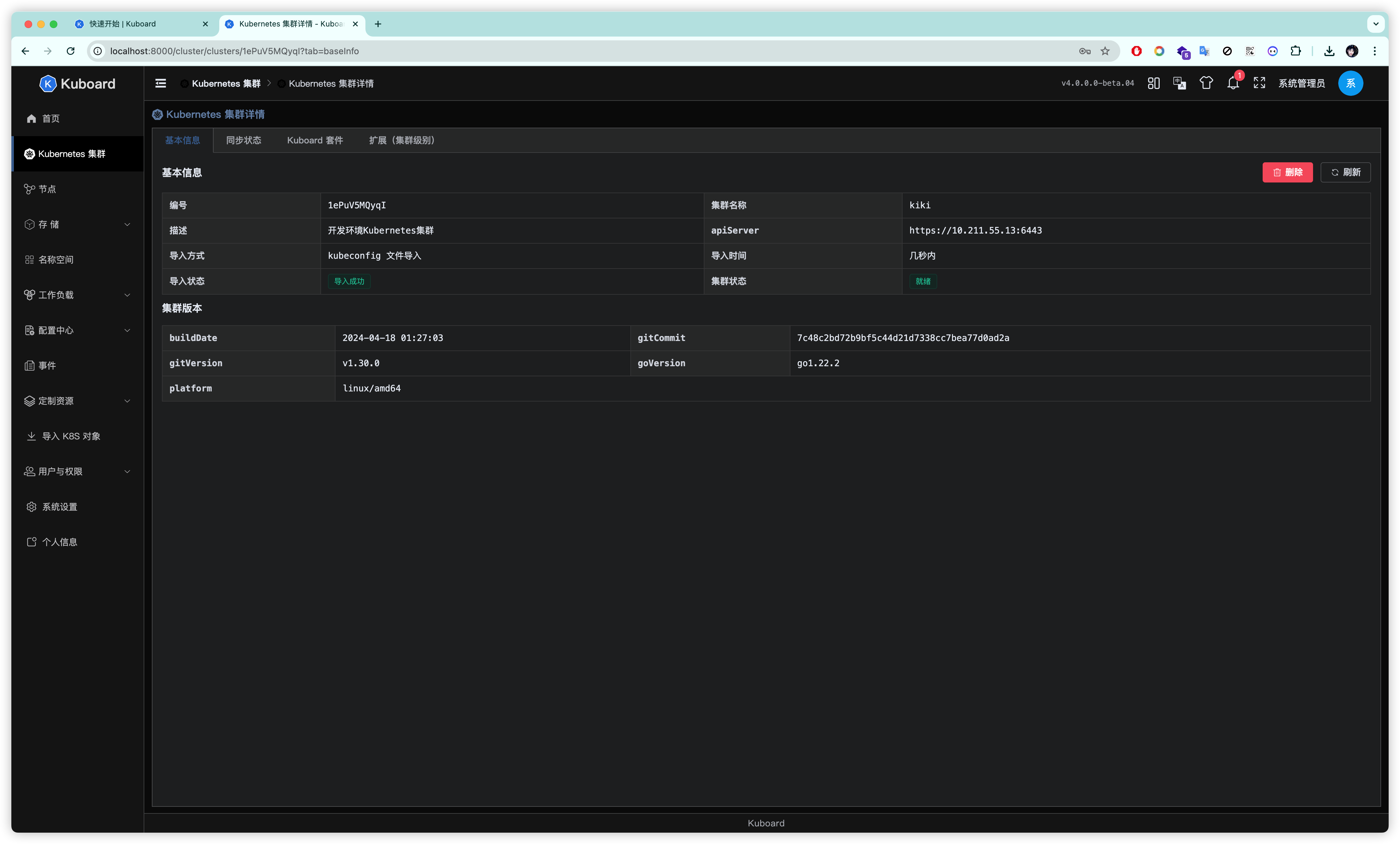

Import the Kubernetes cluster

On the master node, print the kubeconfig:

cat /etc/kubernetes/admin.conf

Paste the content into Kuboard to import the cluster:

apiVersion: v1
clusters:
- cluster:
    certificate-authority-data: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSURCVENDQWUyZ0F3SUJBZ0lJQnREekMxOTlEYzh3RFFZSktvWklodmNOQVFFTEJRQXdGVEVUTUJFR0ExVUUKQXhNS2EzVmlaWEp1WlhSbGN6QWVGdzB5TkRFd01EVXhNekUyTXpsYUZ3MHpOREV3TURNeE16SXhNemxhTUJVeApFekFSQmdOVkJBTVRDbXQxWW1WeWJtVjBaWE13Z2dFaU1BMEdDU3FHU0liM0RRRUJBUVVBQTRJQkR3QXdnZ0VLCkFvSUJBUURBVUQ1SnJycW5DcW5GU0VUY3BkeEQrOExWU0dCM3lTWXpuWTZWdFZOV2ptTy9FQi96SXJlRjdEdEUKSlhJTCsyWjJCNkxEM0xLZzJNV2E5Mk5lYmlaa0hnRDNTQkw3NXlSKytrSUR0aENCYlRZbk9jMk9HalZpYUNacwp3L0o1Zk0rNXNscy9wNVNZY2M4a3V5Zy9YT0hJZFNKdmtFOXV4eEpqQXZWVUpVanROK293L0lJaW03TjkzUjEvCi9pcURJdEdkSThET0cwd25xUEpFWC9EeGNjL2Jmaks0WVZaWW9HNzQ5ejM1MCt1L3NMZGQ4QU56dnRXRUUwVnoKeUxMS3lialZ1V0Y5NVpDUW14Qy9PTEVKL2JtZWVEb0FoUmJ2VDZoTGlxNHdjS1pjSURkRHZxREJMQlkxczB6agp5OHlpRmYrcGJPU0V6YSt1cFROQ0VDemlVdWZCQWdNQkFBR2pXVEJYTUE0R0ExVWREd0VCL3dRRUF3SUNwREFQCkJnTlZIUk1CQWY4RUJUQURBUUgvTUIwR0ExVWREZ1FXQkJTWFVUNG1OekQ1SWZHbFVRaTZHVmxkS3g0aWlqQVYKQmdOVkhSRUVEakFNZ2dwcmRXSmxjbTVsZEdWek1BMEdDU3FHU0liM0RRRUJDd1VBQTRJQkFRQ25tVjd0bzBvKwpNdjVESFd6VjJmYWprL3ZtU3ZVbCtqcnF0YmEvTlFKTHowYTdrR2wxZk1lMFE1ZXJxNXUvYTF6bjNTSUkxQVpHCmx6WXdrNExFblBleUt4aVpyU1pNbzlEY2lSK2tBWGNSckp2UHQyUzVaRVF6R25JSGNWS2lrN2VPZG16WjhhYWQKbmg0OGUwS0lDVjFSUXVlazlzWXptQkVZUlQrWUdsNHlkMmpXQmlIdTRleFhDTGxieitFdUlIR29oRFhzRllnQQplRlh6MkdwdGV1a01FN0VPcDdWdFhod2wwYnB6b21Pb0dYbWpiWXNwY0h2cVVvU0kzMEpYRytGRU5QUjJVZUd5CkxZQWliMFA3TGNBRXZLSWRlNGwzSnhMdDc4dDBLT3ZtNlhyc3RvSmhmWXkzMjhPdVJxTzh6U0pLUXlVQjdUZHYKUWMwZWVrL21JSjdLCi0tLS0tRU5EIENFUlRJRklDQVRFLS0tLS0K
    server: https://10.211.55.13:6443
  name: kubernetes
contexts:
- context:
    cluster: kubernetes
    user: kubernetes-admin
  name: kubernetes-admin@kubernetes
current-context: kubernetes-admin@kubernetes
kind: Config
preferences: {}
users:
- name: kubernetes-admin
  user:
    client-certificate-data: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSURLVENDQWhHZ0F3SUJBZ0lJS0VmRktkSDRrdkl3RFFZSktvWklodmNOQVFFTEJRQXdGVEVUTUJFR0ExVUUKQXhNS2EzVmlaWEp1WlhSbGN6QWVGdzB5TkRFd01EVXhNekUyTXpsYUZ3MHlOVEV3TURVeE16SXhOREJhTUR3eApIekFkQmdOVkJBb1RGbXQxWW1WaFpHMDZZMngxYzNSbGNpMWhaRzFwYm5NeEdUQVhCZ05WQkFNVEVHdDFZbVZ5CmJtVjBaWE10WVdSdGFXNHdnZ0VpTUEwR0NTcUdTSWIzRFFFQkFRVUFBNElCRHdBd2dnRUtBb0lCQVFDMU8xNm0KQW1GNzc3RXZZMC9RMUY1VG84WlRId3pQYnpQZmI0UTVRRXB5d0M5K1FuM2VnSVFkQ09ZWTU3Y2d6cWllVzE2eQo2ZTFkY2Q2akFlWm9VN2RjYjlDbERYRmVEWEdFVGRueVA3TnpTTmZtekZWbko5bGZFM2tYME1jRTZOb0dUYmYvCld0MFc4VGtQWVRoenFxVE5IV3BpdnVKMTExK1FJUlQ0cU9yM3BlMFhYZnBhVjlmOW54cFhzNU9KWDByWHRqZngKRWNyUGlCSlZ4alRCNE5EKzFlckdRQUFrdCtrY3lRWVpid05HUHBQQWZLRFhjZXROQmhwNWlSQ2NQc2ZmZDhweApYNWRuOVZ4ZmQ2U2xLSjVJRHlhWC9VbEQ5QlA5YjJpZW1EZEtEQUN3MTZFS1c1c1RUKzhlN0xNQ2sxR0lPMk9qCmNUakFWZDhtbDJ5UERoc1ZBZ01CQUFHalZqQlVNQTRHQTFVZER3RUIvd1FFQXdJRm9EQVRCZ05WSFNVRUREQUsKQmdnckJnRUZCUWNEQWpBTUJnTlZIUk1CQWY4RUFqQUFNQjhHQTFVZEl3UVlNQmFBRkpkUlBpWTNNUGtoOGFWUgpDTG9aV1YwckhpS0tNQTBHQ1NxR1NJYjNEUUVCQ3dVQUE0SUJBUUMyQUpLMHRlLysxeVlia0FzUHNwWnpwMG9ICk12VEU1UXFKVUZGUFNDdFZLdGxvNEM3OXozdlRhczBEZlhYN21Zek0yc1hRT3RwZUdKRmtpZDNHS0Q5dFpaQmUKVFRIVVRsWERjdkh3eVpkY1F1Mm8zdEhNZVNUMGR1NGw4N1JyNnNlWVZlTzdBRUxEK1VMOEw0eWNFMEZPT0p5aQpHaEZMTFZWSmh0SDByMjgwWTBrR3RPUFdMOVZOVVNLVC9NY0RqYXdKMjBiNWJBVDRyamZJcXR1Zkw4ZjBxYmpkCml1cGc4TmZidVZVYkkrSDBIRE1VSVgvVmlNdFVsRkZMYmkzS0JiNEI4d2lJMXlMcUV5dW0wVWtxV1JnSGFNYjQKdG1QQmpRTy9CalgyOGR6b3l2TFJwQUJ4M2dnRkcrNjBaQTRwUGF6TXhvKzV3WVhtUFlmRWx1ZmoxcjhhCi0tLS0tRU5EIENFUlRJRklDQVRFLS0tLS0K
    client-key-data: LS0tLS1CRUdJTiBSU0EgUFJJVkFURSBLRVktLS0tLQpNSUlFcFFJQkFBS0NBUUVBdFR0ZXBnSmhlKyt4TDJOUDBOUmVVNlBHVXg4TXoyOHozMitFT1VCS2NzQXZma0o5CjNvQ0VIUWptR09lM0lNNm9ubHRlc3VudFhYSGVvd0htYUZPM1hHL1FwUTF4WGcxeGhFM1o4ait6YzBqWDVzeFYKWnlmWlh4TjVGOURIQk9qYUJrMjMvMXJkRnZFNUQyRTRjNnFrelIxcVlyN2lkZGRma0NFVStLanE5Nlh0RjEzNgpXbGZYL1o4YVY3T1RpVjlLMTdZMzhSSEt6NGdTVmNZMHdlRFEvdFhxeGtBQUpMZnBITWtHR1c4RFJqNlR3SHlnCjEzSHJUUVlhZVlrUW5EN0gzM2ZLY1YrWFovVmNYM2VrcFNpZVNBOG1sLzFKUS9RVC9XOW9ucGczU2d3QXNOZWgKQ2x1YkUwL3ZIdXl6QXBOUmlEdGpvM0U0d0ZYZkpwZHNqdzRiRlFJREFRQUJBb0lCQVFDVkxSWmVmWTB5ai96Lwo3YlpRUmM2UytEY1NTbmVuODNmMlRmdS9pRnova1pSR1VJTDgrRHBIRUFFZXRQVDNTMFJlZlFVNUxmYVZPVnF5ClFLa2diUEI4WlFtUUlyMlRWbXQvSlBubVBtcisxUkhxUjMxdzJNdERTR3Nmb0ZtQmdBMmVyTEJzdjZWRzhpNHUKcTY3ay9xeUNyZGpaQ1JpdE9TZlBJbnY1cGtrcXFJWHE3RTVGSW5qekVsdUFENm04ZUZoTmpuRW9WQ1ZWZ0ppYQo3ZVBvU2Y0c1VPVTI3aW4zU1htM3JxRlNYaGZyc2FXRVh6MUhrQVhxdUFpMWxnVkxWbWtSNk56QUhQN29UVHI3CnZHVmtUemN3a2VweGNkZDFJRTJzb3lOMnIzWlpXdGk0VE82L2UyWEtsR1JMY3Nya014RWhtTktIM1BSaHhESGgKcGpMcWNFemhBb0dCQU0vdUR2WEZnOW1Qd2xEcm8zS0lCdGxQVVZaZVpXa3VHSWJrOThDWDV1ZlUxdm1uaTBKRgppZDFSQ1VLV21zd1EyWFl5OXI0UUZpMjQvMWdCa0toTEFLSHNYb2xnbWc0ZnJ5cWo1YkNXZEc0TVBaK0lKNWttCm9UUGFIbEIzY1B0Nk9JdUw2cGpPWEFzQXJZbjFsUUZXS2ViZVBGa3dESEJMUlB2ZGt3ZHFibWlOQW9HQkFOOGgKUFhqN3cveVVuanVRd0JFNjkwS01IYVdOVUcyUUNlMWM4dGt0YXMrQTFiVzEvM1RkSGtIdGk1L0RQUm5aUlcyaApLb1FuSnEyUllPeHIyeTRZMGIwSWJpbmc5ZzlGcnJXZCtzdjFiMytrY3NsdzlNOVV3Z2IrdUVSMEpvcG1QdHZzClZpcFd2bUJUNHBnSTZTL2VLaUlDeW5rMnhYdUJIeW9JY1pvdzJPNnBBb0dBUTlCQ05Nbk1MS0tFSDF3YW5IbmMKc2ZiNmNnNnJTRmh1UzJCVnBReGxsR3FJQ2pnb1pON0ZEZGNtQy8rT1VNdVdBTVN5VUY1eXZVcStqSGRHTkh6eApvZDJ6SDE3UUg1Y1p5L0JVTXZsKzAzMU9nNzhtR3Y3TVNGcjAxQTJBWGFRSTJRb3k0czg2bWFRSTlSdVJFelNFCnlmVGsvYmw3OVF1M1hlVnYxRlZUMk9rQ2dZRUFrWGFWSWR0WWNNRGV5MHhadXFIcmNtbndKZTZUb1duRzN3UzYKbVZVZmpmbWEyV1MyRHBUYzFmUXNFMUp2OGZzUVpTRXRtNHYramliNXZnZXVrMFhBN25DaGlSSE11RFlnYU94KwpCUnVUdmU0U216clZqcGplQ1R0a0c5UnhEOGNLY2N1SWZQK2lDeUNFMThMdmFySjJXMGZnZ2Rkd05Vei9hU0txCkZQQ0ZiRWtDZ1lFQW5PREt1bFI5Q3ZIaXR2eTlnS1FnaWxzYjBmYThEaGttSElHTGRKRytsSCtESFhiWlJ6Qk0KbUpQbTF4ME95UFI1ZXB4eGtCaHRmRzN1VWRETWRTangyQTlxQkNSeDN6cmZzcDVhRjN5dmg5UFp2Qk85MXNxRQppQms2M1czejNxcUJhcElpNFhaWFhvZmxiL3FWZVlqYjJnL2kvNFUwMk5PS04rUTF1VGQvNUlBPQotLS0tLUVORCBSU0EgUFJJVkFURSBLRVktLS0tLQo=

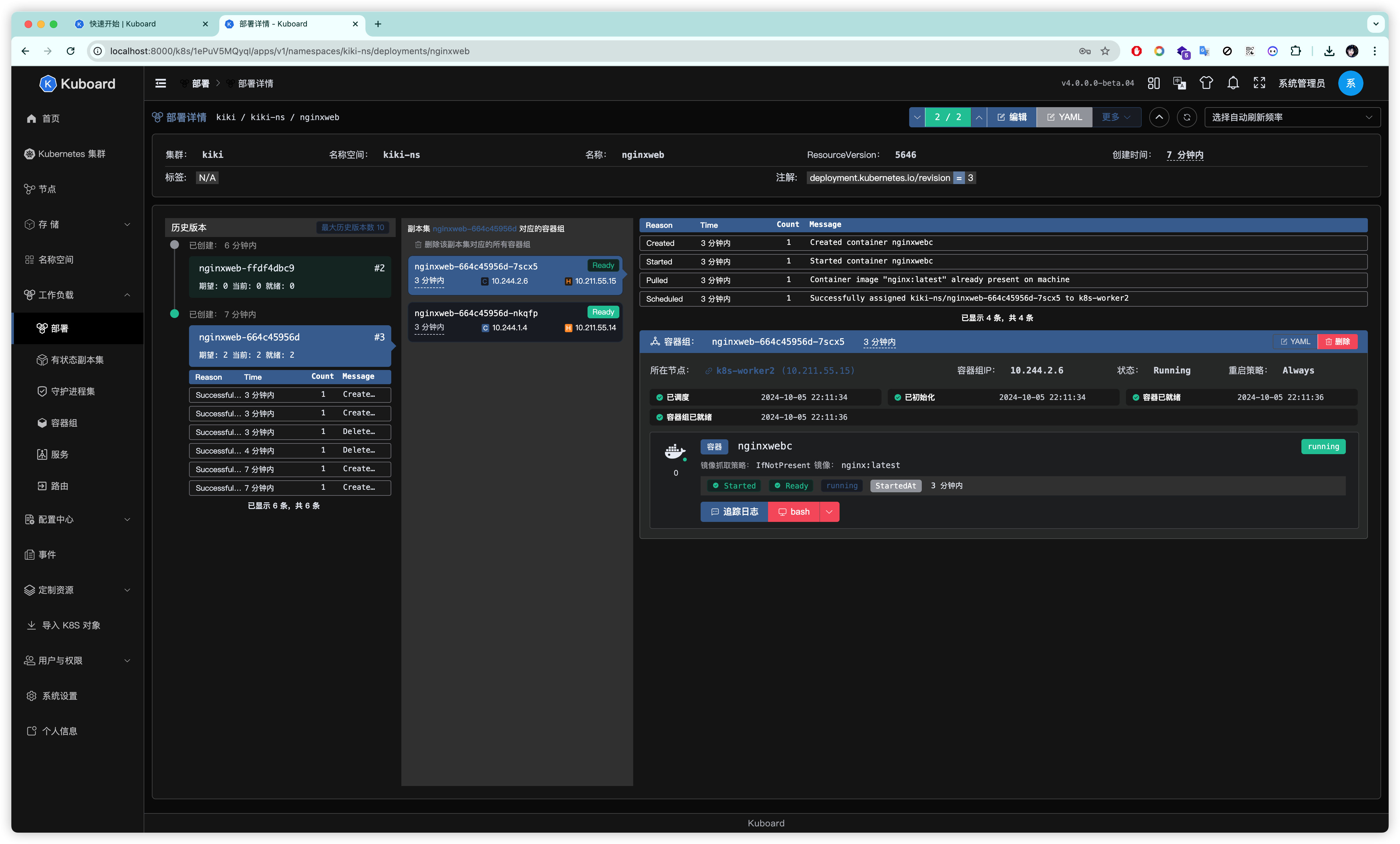

Test the cluster

Create a namespace for testing

kubectl create ns kiki-ns

Create a Deployment

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginxweb
  namespace: kiki-ns # target namespace
spec:
  selector:
    matchLabels:
      app: nginx-kiki # every Pod of this Deployment carries this label; the Service selects on it
  replicas: 2
  template:
    metadata:
      labels:
        app: nginx-kiki
    spec:
      containers:
      - name: nginx-web
        image: nginx:1.25.4
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 80

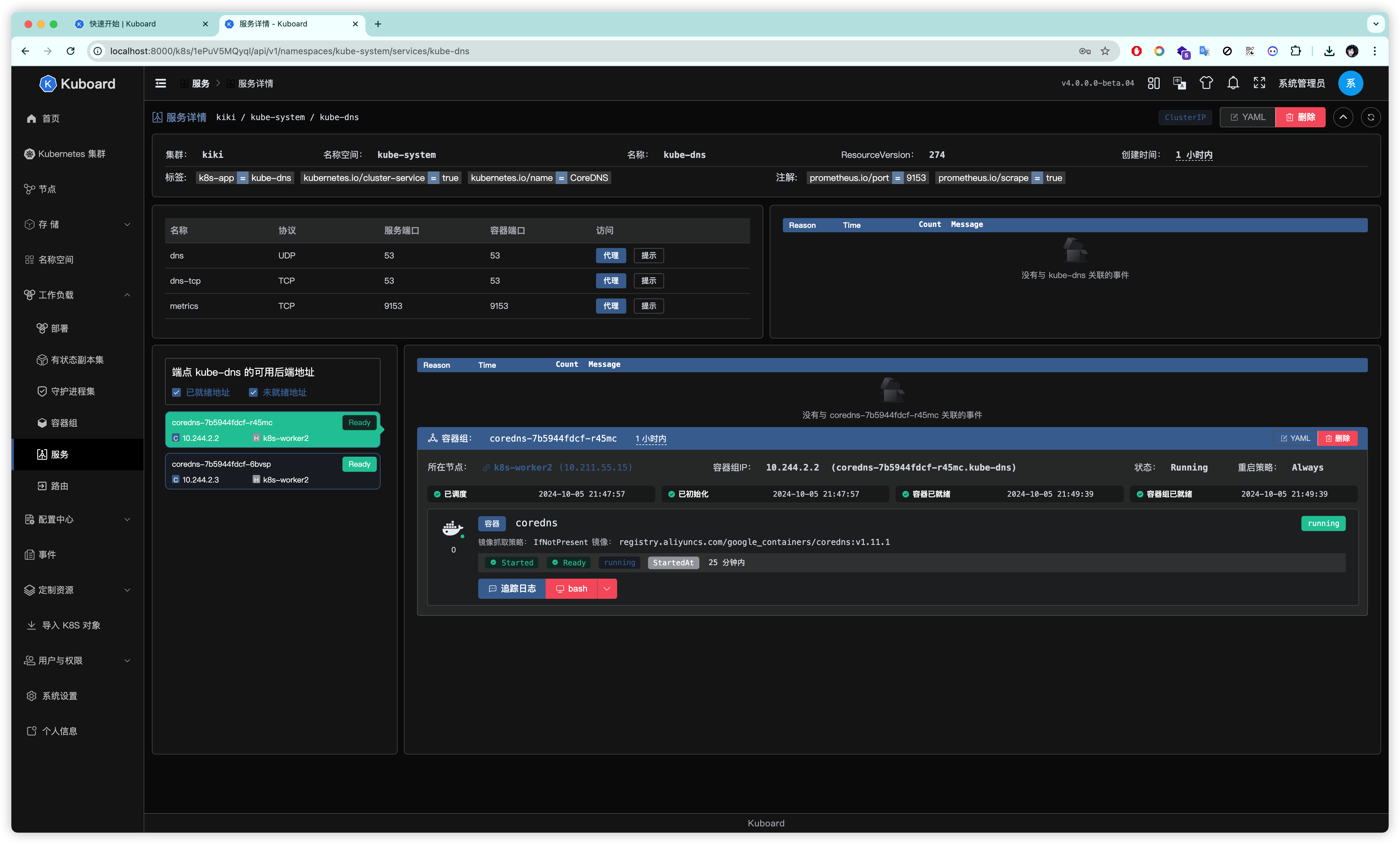

Create a Service for the Deployment

apiVersion: v1
kind: Service
metadata:
  name: nginx-web-service
  namespace: kiki-ns
spec:
  externalTrafficPolicy: Cluster
  selector:
    app: nginx-kiki # selects all Pods of the Deployment created above
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80
    nodePort: 30030 # for simplicity, expose the port with NodePort rather than Ingress
  type: NodePort

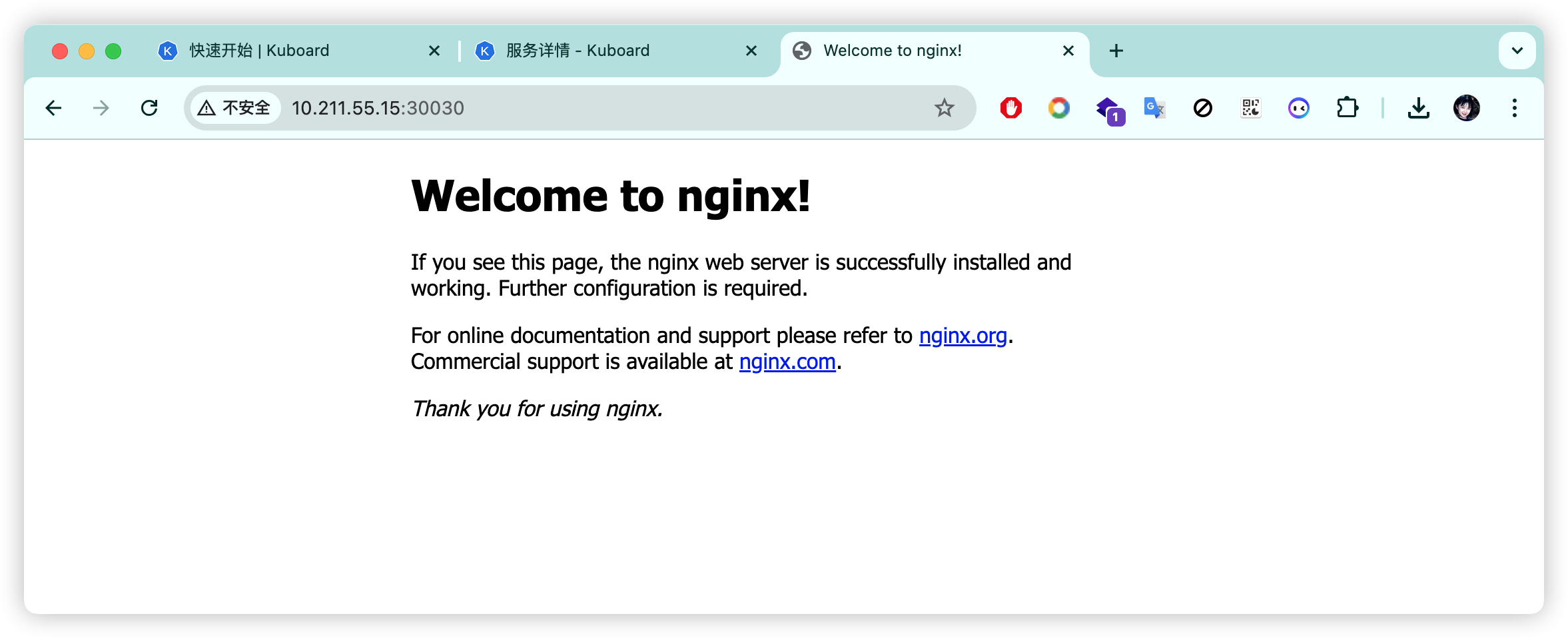

Access the Service at any node's IP on the Service's nodePort.
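
The article shows the manifests and screenshots but not the commands. As a sketch, assuming the two manifests were saved as nginx-deployment.yaml and nginx-service.yaml (hypothetical file names), they can be applied and then probed on every node; `nodeport_urls` is a hypothetical helper.

```shell
# Hypothetical helper: print one URL per node IP for a given NodePort.
nodeport_urls() {
  port="$1"
  shift
  for ip in "$@"; do
    printf 'http://%s:%s/\n' "$ip" "$port"
  done
}

# Intended use from any machine that can reach the nodes:
#   kubectl apply -f nginx-deployment.yaml -f nginx-service.yaml
#   kubectl -n kiki-ns get pods,svc -o wide
#   for url in $(nodeport_urls 30030 10.211.55.13 10.211.55.14 10.211.55.15); do
#     curl -s -o /dev/null -w "%{http_code} $url\n" "$url"
#   done
```

With externalTrafficPolicy set to Cluster, each node is expected to answer on port 30030 whether or not an nginx Pod runs on it.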

That completes the cluster installation. This write-up is mainly a record for my own future reference.